Utilize Multicore Processors with Python

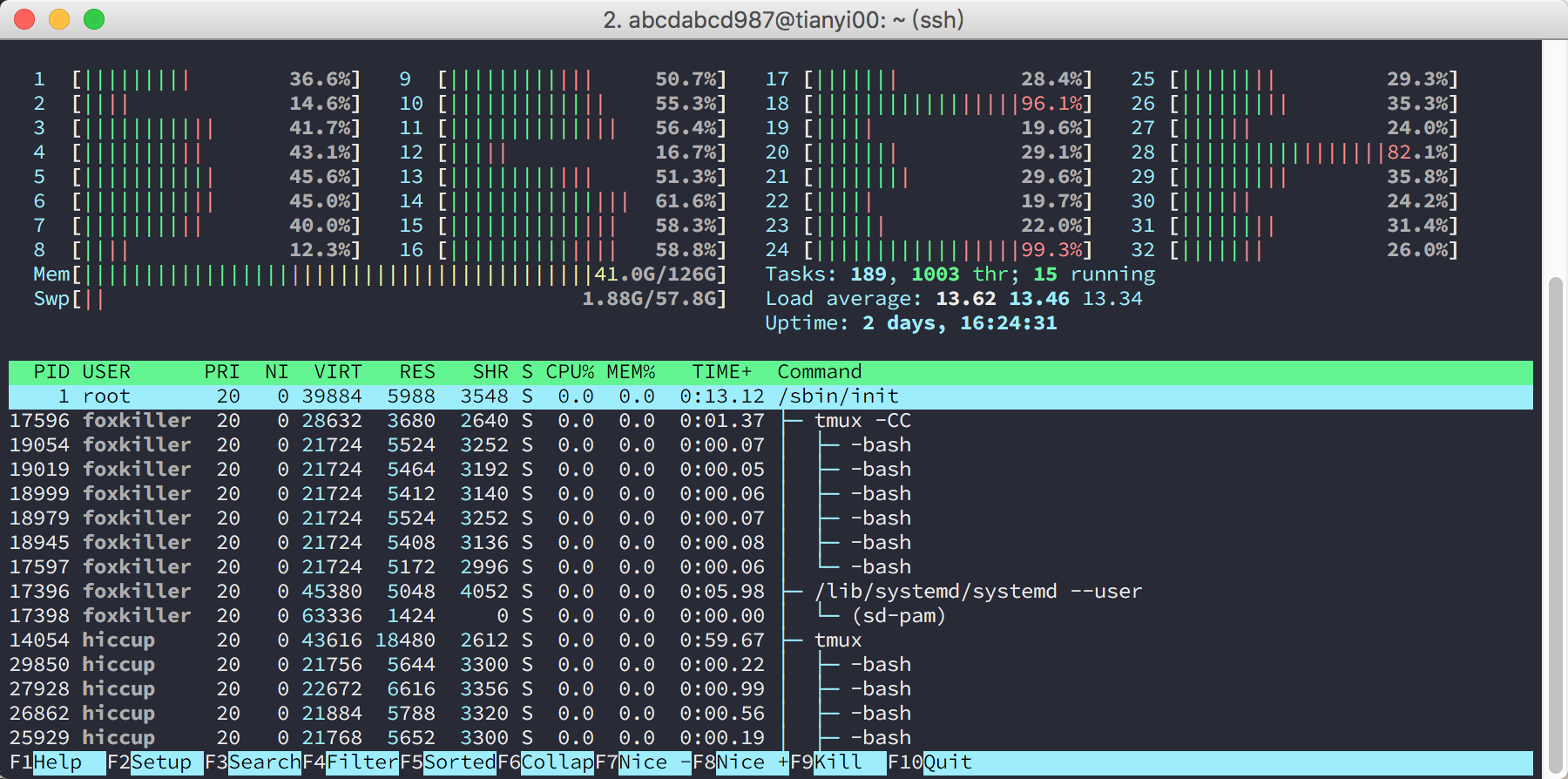

I used to not bother parallelizing small programs, because I thought it would cost me more time than writing the programs themselves, and because my laptop has only four cores. Unless a task was IO intensive, I would leave it running on a single thread. Everything changed once I got access to a machine with 32 cores and 128GB of memory. Looking at all those idle cores in htop, I felt the urge to put them to work. And I found that it’s super easy to parallelize a Python program.

multiprocessing vs threading

The Python standard library covers a large part of daily needs and is very handy, which is one of the reasons I love Python so much. It has two built-in libraries for parallelism: multiprocessing and threading. Reaching for threads is a natural thought, especially if you have experience with parallelism in other languages. Threads are awesome. The cost of multithreading is relatively low, and threads share memory by default. However, I have to tell you the bad news: if you are using the CPython implementation, using the threading library for computation-intensive tasks amounts to saying goodbye to parallelism. In fact, it can run even slower than a single thread.

Global Interpreter Lock

The CPython I just mentioned is the reference implementation of the Python language, the one distributed by python.org. Yes, Python is a language, and there are lots of different implementations, such as PyPy, Jython, IronPython, and so on. CPython is the most widely used one. When people talk about Python, they almost always mean CPython.

CPython uses a Global Interpreter Lock (GIL) to simplify the implementation of the interpreter. The GIL lets the interpreter execute bytecode from only one thread at a time. Unless your threads spend their time waiting for IO operations, multithreading in CPython is entirely a lie! Of course, if you don’t use CPython and don’t have this GIL issue, you might consider threading again.
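A rough way to see the GIL’s effect is to push the same CPU-bound function through a thread pool and a process pool and compare wall-clock time. The sketch below is only an illustration: `count_primes` and `timed_map` are hypothetical helpers, not part of the original post, and the timings depend on your machine and Python build, so no numbers are claimed here.

```python
import time
from multiprocessing import Pool
from multiprocessing.pool import ThreadPool


def count_primes(n):
    """CPU-bound toy workload (hypothetical): count primes below n by trial division."""
    count = 0
    for k in range(2, n):
        if all(k % d for d in range(2, int(k ** 0.5) + 1)):
            count += 1
    return count


def timed_map(pool_cls, workers, inputs):
    """Map count_primes over inputs with the given pool class; return (results, seconds)."""
    start = time.perf_counter()
    with pool_cls(workers) as pool:
        results = pool.map(count_primes, inputs)
    return results, time.perf_counter() - start


if __name__ == '__main__':
    inputs = [100000] * 4
    for name, pool_cls in [('threads', ThreadPool), ('processes', Pool)]:
        results, seconds = timed_map(pool_cls, 4, inputs)
        print('%-9s %.2fs' % (name, seconds))
```

On a standard CPython build with several free cores, the process pool would be expected to finish noticeably faster than the thread pool, since the threads take turns holding the GIL.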

To learn more about the GIL, you might be interested in the following materials:

multiprocessing.Pool

So, ruling out threading, let’s take a look at multiprocessing.

multiprocessing.Pool is a handy tool. If you can express a task as ys = map(f, xs), where every call to f is independent of the others and so trivially parallel, it’s super easy to make it parallel in Python. For example, to square each element of a list:

    import multiprocessing

    def f(x):
        return x * x

    # The guard keeps worker processes from re-running this block
    # on platforms that start workers by re-importing the module.
    if __name__ == '__main__':
        cores = multiprocessing.cpu_count()
        pool = multiprocessing.Pool(processes=cores)
        xs = range(5)

        # method 1: map
        print(pool.map(f, xs))  # prints [0, 1, 4, 9, 16]

        # method 2: imap
        for y in pool.imap(f, xs):
            print(y)  # 0, 1, 4, 9, 16, respectively

        # method 3: imap_unordered
        for y in pool.imap_unordered(f, xs):
            print(y)  # may be in any order

map returns a list. The two methods prefixed with i, imap and imap_unordered, return iterators. The _unordered suffix means that results are yielded as they complete, not necessarily in input order.
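Because imap_unordered gives up ordering, one common workaround is to have the worker return its input alongside the result, so each output can be matched back to what produced it. A minimal sketch, where `square_with_input` is a hypothetical helper and not part of the example above:

```python
import multiprocessing


def square_with_input(x):
    # Return the input together with the result so the caller can
    # tell which x produced which y, whatever the arrival order.
    return x, x * x


if __name__ == '__main__':
    xs = range(5)
    results = {}
    with multiprocessing.Pool(processes=2) as pool:
        for x, y in pool.imap_unordered(square_with_input, xs):
            results[x] = y
    # Rebuild the ordered list from the tagged results.
    print([results[x] for x in xs])  # prints [0, 1, 4, 9, 16]
```

This keeps the benefit of handling results as soon as they arrive while still letting you reassemble them in input order afterwards.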

When a task takes a long time, we might want a progress bar. Printing \r moves the cursor back to the start of the line without advancing to a new line, so the next write overwrites the previous one. We can do this:

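    import sys

    cnt = 0
    for y in pool.imap_unordered(f, xs):
        cnt += 1  # count this result before reporting progress
        sys.stdout.write('done %d/%d\r' % (cnt, len(xs)))
        sys.stdout.flush()  # show the update immediately
        # deal with y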

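Alternatively, the handy tqdm library can take care of the progress bar for you:

    from tqdm import tqdm

    # total= lets tqdm show a percentage, since the iterator has no length
    for y in tqdm(pool.imap_unordered(f, xs), total=len(xs)):
        pass  # deal with y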

More Complicated Tasks

If the task is more complicated, you can use multiprocessing.Process to spawn subprocesses. There are several ways for those subprocesses to communicate with each other:

I strongly recommend Queue. Many applications fit the producer-consumer model. For example, the parent can create Queues and pass them as arguments when spawning children. Then the parent sends tasks to the children through one Queue, and the children send results back through another.
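Here is a minimal sketch of that producer-consumer setup using only the standard library. The names `process`, `worker`, and the `STOP` sentinel are illustrative assumptions, and the "work" is just squaring a number; the sentinel is one common way to let workers exit on their own once the tasks run out.

```python
import multiprocessing

STOP = None  # sentinel telling a worker there are no more tasks


def process(task):
    # Placeholder for the real work (hypothetical): square the number.
    return task * task


def worker(taskq, resultq):
    # Consume tasks until the sentinel arrives, then exit cleanly.
    while True:
        task = taskq.get()
        if task is STOP:
            break
        resultq.put((task, process(task)))


if __name__ == '__main__':
    num_workers = 2
    tasks = [1, 2, 3, 4, 5]
    taskq = multiprocessing.Queue()
    resultq = multiprocessing.Queue()

    workers = [multiprocessing.Process(target=worker, args=(taskq, resultq))
               for _ in range(num_workers)]
    for p in workers:
        p.start()

    for t in tasks:
        taskq.put(t)
    for _ in workers:
        taskq.put(STOP)  # one sentinel per worker

    # Collect exactly one result per task before joining the workers.
    results = dict(resultq.get() for _ in tasks)
    for p in workers:
        p.join()

    print(sorted(results.items()))
```

Draining the result queue before calling join matters here: a child that still has unflushed items in a Queue may not exit until they are read.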

Watch Out When Importing Libraries

Importing a library can have side effects, such as setting global variables, opening files, listening on sockets, creating threads, and so on. For example, if you import libraries that use CUDA, such as Theano and TensorFlow, and then spawn a subprocess, things might go wrong:

could not retrieve CUDA device count: CUDA_ERROR_NOT_INITIALIZED

The solution is to not import those libraries in the parent and to import them in the subprocesses instead. Here is how you can do it with multiprocessing.Process:

    import multiprocessing

    def hello(taskq, resultq):
        # Import TensorFlow inside the child so the parent never initializes CUDA.
        # tf.ConfigProto and tf.Session are TensorFlow 1.x APIs.
        import tensorflow as tf
        config = tf.ConfigProto()
        config.gpu_options.allow_growth = True
        sess = tf.Session(config=config)
        while True:
            name = taskq.get()
            res = sess.run(tf.constant('hello ' + name))
            resultq.put(res)

    if __name__ == '__main__':
        taskq = multiprocessing.Queue()
        resultq = multiprocessing.Queue()
        p = multiprocessing.Process(target=hello, args=(taskq, resultq))
        p.start()
        taskq.put('world')
        taskq.put('abcdabcd987')
        taskq.close()
        print(resultq.get())
        print(resultq.get())
        # The worker loops forever, so stop it explicitly once the results are in.
        p.terminate()
        p.join()

For multiprocessing.Pool, you can write an initializer function and pass it to the pool; it runs once in each worker process when that worker starts.

    import multiprocessing

    def init():
        # Runs once in each worker; the globals make tf and sess visible to hello().
        global tf
        global sess
        import tensorflow as tf
        config = tf.ConfigProto()
        config.gpu_options.allow_growth = True
        sess = tf.Session(config=config)

    def hello(name):
        return sess.run(tf.constant('hello ' + name))

    if __name__ == '__main__':
        pool = multiprocessing.Pool(processes=2, initializer=init)
        xs = ['world', 'abcdabcd987', 'Lequn Chen']
        print(pool.map(hello, xs))
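The same initializer pattern works for any expensive per-process resource, not only TensorFlow. The sketch below swaps in a stand-in so it runs without a GPU or extra packages: `load_model`, `init_worker`, and `greet` are hypothetical names, and `load_model` just builds a dict where a real program would create a session or load weights.

```python
import multiprocessing
import os

_model = None  # set once per worker process by init_worker


def load_model(prefix):
    # Stand-in for an expensive setup step such as creating a session.
    return {'prefix': prefix, 'pid': os.getpid()}


def init_worker(prefix):
    # Runs once in every worker right after it starts.
    global _model
    _model = load_model(prefix)


def greet(name):
    # Uses the per-process resource created by init_worker.
    return _model['prefix'] + name


if __name__ == '__main__':
    with multiprocessing.Pool(processes=2, initializer=init_worker,
                              initargs=('hello ',)) as pool:
        print(pool.map(greet, ['world', 'abcdabcd987', 'Lequn Chen']))
        # prints ['hello world', 'hello abcdabcd987', 'hello Lequn Chen']
```

Passing arguments through initargs keeps the setup configurable without relying on state inherited from the parent.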