Harnessing 3200Gbps Network (8): Bus Topology

In the previous chapter, we achieved a transmission speed of 97.844 Gbps on a single network card. In Chapter 2, we noted that the best transmission speed comes from pairing each GPU with its nearby network cards. Before we start using multiple network cards, we'll first work out the machine's system topology in this chapter. We'll name this program 8_topo.cpp.

Obtaining System Topology

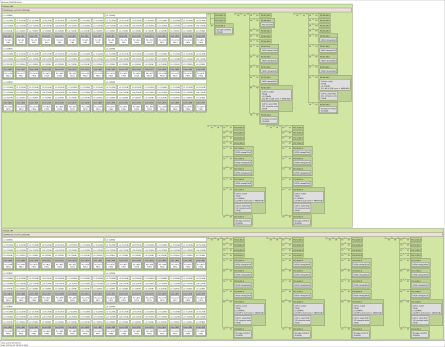

To obtain the system topology on Linux, you can use the hwloc library, which includes a command-line tool lstopo that can generate a system topology diagram. When we run lstopo, we can see the following topology diagram:

Simplified a bit, the system topology of AWS p5 instances looks like the following diagram:

We can see that the system has two CPUs, with each CPU connected to 4 PCIe switches. Each PCIe switch connects four 100 Gbps EFA network cards, one NVIDIA H100 GPU, and one 3.84 TB NVMe SSD.

Another way to obtain the system topology is the lspci -tv command. Its output shows the address of each PCIe switch and the devices attached beneath it. For example:

    -+-[0000:bb]---00.0-[bc-cb]----00.0-[bd-cb]--+-01.0-[c6]----00.0 Amazon.com, Inc. Elastic Fabric Adapter (EFA)
     |                                           +-01.1-[c7]----00.0 Amazon.com, Inc. Elastic Fabric Adapter (EFA)
     |                                           +-01.2-[c8]----00.0 Amazon.com, Inc. Elastic Fabric Adapter (EFA)
     |                                           +-01.3-[c9]----00.0 Amazon.com, Inc. Elastic Fabric Adapter (EFA)
     |                                           +-01.4-[ca]----00.0 NVIDIA Corporation GH100 [H100 SXM5 80GB]
     |                                           \-01.5-[cb]----00.0 Amazon.com, Inc. NVMe SSD Controller
     +-[0000:aa]---00.0-[ab-ba]----00.0-[ac-ba]--+-01.0-[b5]----00.0 Amazon.com, Inc. Elastic Fabric Adapter (EFA)
     |                                           +-01.1-[b6]----00.0 Amazon.com, Inc. Elastic Fabric Adapter (EFA)
     |                                           +-01.2-[b7]----00.0 Amazon.com, Inc. Elastic Fabric Adapter (EFA)
     |                                           +-01.3-[b8]----00.0 Amazon.com, Inc. Elastic Fabric Adapter (EFA)
     |                                           +-01.4-[b9]----00.0 NVIDIA Corporation GH100 [H100 SXM5 80GB]
     |                                           \-01.5-[ba]----00.0 Amazon.com, Inc. NVMe SSD Controller
    ...

In Linux, PCI addresses have the following format:

XXXX:BB:DD.F

- XXXX is the PCI domain number, a 16-bit integer represented by 4 hexadecimal characters, usually 0000.
- BB is the bus number, an 8-bit integer represented by 2 hexadecimal characters.
- DD is the device number, a 5-bit integer represented by 2 hexadecimal characters.
- F is the function number, a 3-bit integer represented by 1 hexadecimal character.
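As a minimal sketch of this format, the following C++ helper splits an address string into its four fields and rejects values that do not fit the bit widths above. The PciAddress struct and ParsePciAddress() are names made up for this illustration; they are not part of 8_topo.cpp.

```cpp
#include <cctype>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <string>

// Hypothetical helper type for illustration only.
struct PciAddress {
  uint16_t domain;   // XXXX: 16 bits
  uint8_t bus;       // BB: 8 bits
  uint8_t device;    // DD: 5 bits (0x00..0x1f)
  uint8_t function;  // F: 3 bits (0..7)
};

// Parses "XXXX:BB:DD.F". Returns std::nullopt if the string is malformed
// or a field exceeds its bit width.
std::optional<PciAddress> ParsePciAddress(const std::string &s) {
  // Fixed layout: separators sit at indices 4, 7 and 10.
  if (s.size() != 12 || s[4] != ':' || s[7] != ':' || s[10] != '.') {
    return std::nullopt;
  }
  for (std::size_t i = 0; i < s.size(); ++i) {
    if (i == 4 || i == 7 || i == 10) continue;
    if (!std::isxdigit(static_cast<unsigned char>(s[i]))) return std::nullopt;
  }
  auto hex = [&s](std::size_t pos, std::size_t len) {
    return static_cast<unsigned>(std::stoul(s.substr(pos, len), nullptr, 16));
  };
  const unsigned device = hex(8, 2);
  const unsigned function = hex(11, 1);
  if (device > 0x1f || function > 7) return std::nullopt;
  return PciAddress{static_cast<uint16_t>(hex(0, 4)),
                    static_cast<uint8_t>(hex(5, 2)),
                    static_cast<uint8_t>(device),
                    static_cast<uint8_t>(function)};
}
```

For example, ParsePciAddress("0000:ac:01.4") yields bus 0xac, device 1, function 4, which matches the GPU port in the lspci -tv output above.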

The most common way to get the system topology in a program is to use the hwloc library, which is also the method used by aws-ofi-nccl. However, because I didn’t want to introduce additional dependencies, I chose to directly read the Linux /sys filesystem.

Linux sysfs

The /sys/bus/pci/devices/ directory lists all PCI devices. Each entry is a symbolic link pointing to a path in /sys/devices/, and this path itself reflects the device's position in the topology. For example:

    $ ls -la /sys/bus/pci/devices/
    ...
    0000:b5:00.0 -> ../../../devices/pci0000:aa/0000:aa:00.0/0000:ab:00.0/0000:ac:01.0/0000:b5:00.0
    0000:b6:00.0 -> ../../../devices/pci0000:aa/0000:aa:00.0/0000:ab:00.0/0000:ac:01.1/0000:b6:00.0
    0000:b7:00.0 -> ../../../devices/pci0000:aa/0000:aa:00.0/0000:ab:00.0/0000:ac:01.2/0000:b7:00.0
    0000:b8:00.0 -> ../../../devices/pci0000:aa/0000:aa:00.0/0000:ab:00.0/0000:ac:01.3/0000:b8:00.0
    0000:b9:00.0 -> ../../../devices/pci0000:aa/0000:aa:00.0/0000:ab:00.0/0000:ac:01.4/0000:b9:00.0
    0000:ba:00.0 -> ../../../devices/pci0000:aa/0000:aa:00.0/0000:ab:00.0/0000:ac:01.5/0000:ba:00.0
    ...
    0000:c6:00.0 -> ../../../devices/pci0000:bb/0000:bb:00.0/0000:bc:00.0/0000:bd:01.0/0000:c6:00.0
    0000:c7:00.0 -> ../../../devices/pci0000:bb/0000:bb:00.0/0000:bc:00.0/0000:bd:01.1/0000:c7:00.0
    0000:c8:00.0 -> ../../../devices/pci0000:bb/0000:bb:00.0/0000:bc:00.0/0000:bd:01.2/0000:c8:00.0
    0000:c9:00.0 -> ../../../devices/pci0000:bb/0000:bb:00.0/0000:bc:00.0/0000:bd:01.3/0000:c9:00.0
    0000:ca:00.0 -> ../../../devices/pci0000:bb/0000:bb:00.0/0000:bc:00.0/0000:bd:01.4/0000:ca:00.0
    0000:cb:00.0 -> ../../../devices/pci0000:bb/0000:bb:00.0/0000:bc:00.0/0000:bd:01.5/0000:cb:00.0
    ...

Comparing with the previous lspci -tv result, we can see that the GPU [ca] and the four network cards [c6] [c7] [c8] [c9] are all mounted under the PCIe switch [bd]. Through this symbolic link structure, we can construct the system’s topology.
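One way to turn these paths into a GPU-to-NIC pairing is to count how many leading path components two devices share: the deeper the shared ancestor, the closer the devices. The sketch below assumes the symbolic links have already been resolved to absolute paths (for example with std::filesystem::canonical()). SharedDepth() and NearestNics() are hypothetical helpers written for this illustration, not necessarily how 8_topo.cpp does it.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Splits "/a/b/c" into {"a", "b", "c"}, skipping empty components.
std::vector<std::string> SplitPath(const std::string &path) {
  std::vector<std::string> parts;
  std::size_t start = 0;
  while (start <= path.size()) {
    std::size_t end = path.find('/', start);
    if (end == std::string::npos) end = path.size();
    if (end > start) parts.push_back(path.substr(start, end - start));
    start = end + 1;
  }
  return parts;
}

// Number of leading path components shared by two resolved sysfs paths.
std::size_t SharedDepth(const std::string &a, const std::string &b) {
  const auto pa = SplitPath(a);
  const auto pb = SplitPath(b);
  std::size_t n = 0;
  while (n < pa.size() && n < pb.size() && pa[n] == pb[n]) ++n;
  return n;
}

// Returns the indices of the NICs whose paths share the deepest common
// ancestor with the GPU path.
std::vector<std::size_t> NearestNics(const std::string &gpu_path,
                                     const std::vector<std::string> &nic_paths) {
  std::size_t best = 0;
  std::vector<std::size_t> result;
  for (std::size_t i = 0; i < nic_paths.size(); ++i) {
    const std::size_t depth = SharedDepth(gpu_path, nic_paths[i]);
    if (depth > best) {
      best = depth;
      result.clear();
    }
    if (depth == best) result.push_back(i);
  }
  return result;
}
```

With the paths listed above, the GPU 0000:b9:00.0 shares its ancestors down to 0000:ab:00.0 with the network cards b5 to b8, but only the /sys/devices prefix with c6 to c9, so only the former are selected.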

Obtaining GPU PCI Addresses

Through the cudaGetDeviceProperties() function, we can obtain the GPU’s PCI address.

    int num_gpus = 0;
    CUDA_CHECK(cudaGetDeviceCount(&num_gpus));
    for (int cuda_device = 0; cuda_device < num_gpus; ++cuda_device) {
      char pci_addr[16];
      cudaDeviceProp prop;
      CUDA_CHECK(cudaGetDeviceProperties(&prop, cuda_device));
      // Format as XXXX:BB:DD.F; the function number is hardcoded to 0.
      snprintf(pci_addr, sizeof(pci_addr), "%04x:%02x:%02x.0",
               prop.pciDomainID, prop.pciBusID, prop.pciDeviceID);
      // ...
    }

Obtaining Network Card PCI Addresses

As the fi_info output at the end of Chapter 3 showed, the fi_info structure carries the network card's PCI address.

    struct fi_info *info = GetInfo();
    // fi_info entries form a linked list, one entry per network card.
    for (auto* fi = info; fi; fi = fi->next) {
      char pci_addr[16];
      // Format as XXXX:BB:DD.F from the NIC's PCI bus attributes.
      snprintf(pci_addr, sizeof(pci_addr), "%04x:%02x:%02x.%d",
               fi->nic->bus_attr->attr.pci.domain_id,
               fi->nic->bus_attr->attr.pci.bus_id,
               fi->nic->bus_attr->attr.pci.device_id,
               fi->nic->bus_attr->attr.pci.function_id);
      // ...
    }

Obtaining CPU Topology

NUMA Node Information

When there are multiple NUMA nodes on a machine, it’s often necessary to consider NUMA issues when writing high-performance programs. Information about each NUMA node in the Linux system is stored in folders matching this regular expression:

/sys/devices/system/node/node[0-9]+/

For example:

    $ ls -la /sys/devices/system/node/
    total 0
    drwxr-xr-x  5 root root    0 Oct 14 04:36 .
    drwxr-xr-x 10 root root    0 Oct 14 04:36 ..
    -r--r--r--  1 root root 4096 Dec 31 22:04 has_cpu
    -r--r--r--  1 root root 4096 Dec 31 22:04 has_generic_initiator
    -r--r--r--  1 root root 4096 Dec 31 22:04 has_memory
    -r--r--r--  1 root root 4096 Dec 31 22:04 has_normal_memory
    drwxr-xr-x  4 root root    0 Oct 14 04:36 node0
    drwxr-xr-x  4 root root    0 Oct 14 04:36 node1
    -r--r--r--  1 root root 4096 Dec  3 02:39 online
    -r--r--r--  1 root root 4096 Dec 31 22:04 possible
    drwxr-xr-x  2 root root    0 Dec 31 20:45 power
    -rw-r--r--  1 root root 4096 Oct 14 04:36 uevent

Here we can see that the system has 2 NUMA nodes.
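In a program, the same information can be collected by listing the directory and keeping only the entries that match the pattern. The following is a minimal sketch using std::filesystem instead of a regular expression library; ParseIndexedName() and ListIndexedEntries() are hypothetical names chosen for this example.

```cpp
#include <algorithm>
#include <cctype>
#include <cstddef>
#include <filesystem>
#include <optional>
#include <string>
#include <system_error>
#include <vector>

// Returns N if `name` is exactly `prefix` followed by 1 to 9 decimal digits,
// e.g. ("node1", "node") -> 1. Anything else yields std::nullopt.
std::optional<int> ParseIndexedName(const std::string &name,
                                    const std::string &prefix) {
  if (name.size() <= prefix.size() || name.size() - prefix.size() > 9 ||
      name.compare(0, prefix.size(), prefix) != 0) {
    return std::nullopt;
  }
  for (std::size_t i = prefix.size(); i < name.size(); ++i) {
    if (!std::isdigit(static_cast<unsigned char>(name[i]))) return std::nullopt;
  }
  return std::stoi(name.substr(prefix.size()));
}

// Lists the sorted indices of all entries named <prefix><digits> in `dir`.
// Returns an empty vector if the directory cannot be read.
std::vector<int> ListIndexedEntries(const std::filesystem::path &dir,
                                    const std::string &prefix) {
  std::vector<int> ids;
  std::error_code ec;
  for (std::filesystem::directory_iterator it(dir, ec), end; !ec && it != end;
       it.increment(ec)) {
    if (auto id = ParseIndexedName(it->path().filename().string(), prefix)) {
      ids.push_back(*id);
    }
  }
  std::sort(ids.begin(), ids.end());
  return ids;
}
```

Calling ListIndexedEntries("/sys/devices/system/node", "node") on the machine above should return the two node numbers, while entries such as has_cpu or online are skipped because they do not match the pattern.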

CPU Core Information

The CPU numbers under each NUMA node are located in folders matching this regular expression:

/sys/devices/system/node/node[0-9]+/cpu[0-9]+/

For example:

    $ ls -la /sys/devices/system/node/node1/cpu*
    lrwxrwxrwx 1 root root     0 Dec 31 20:45 /sys/devices/system/node/node1/cpu48 -> ../../cpu/cpu48
    lrwxrwxrwx 1 root root     0 Dec 31 20:45 /sys/devices/system/node/node1/cpu49 -> ../../cpu/cpu49
    lrwxrwxrwx 1 root root     0 Dec 31 20:45 /sys/devices/system/node/node1/cpu50 -> ../../cpu/cpu50
    lrwxrwxrwx 1 root root     0 Dec 31 20:45 /sys/devices/system/node/node1/cpu51 -> ../../cpu/cpu51
    lrwxrwxrwx 1 root root     0 Dec 31 20:45 /sys/devices/system/node/node1/cpu52 -> ../../cpu/cpu52
    ...
    -r--r--r-- 1 root root 28672 Dec 31 22:07 /sys/devices/system/node/node1/cpulist
    -r--r--r-- 1 root root  4096 Dec  3 02:39 /sys/devices/system/node/node1/cpumap
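The cpulist file in the listing above offers a shortcut: it holds the same CPU numbers as a comma-separated list of numbers and ranges. As a sketch, assuming the file contains text such as 0-1,4,6-7 followed by a newline, it can be expanded like this. ParseCpuList() is a hypothetical helper, and it lets std::stoi throw on malformed input rather than handling errors itself.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Expands a cpulist string such as "0-1,4,6-7\n" into {0, 1, 4, 6, 7}.
// Throws std::invalid_argument (from std::stoi) on non-numeric tokens.
std::vector<int> ParseCpuList(const std::string &text) {
  std::vector<int> cpus;
  std::stringstream ss(text);
  std::string token;
  while (std::getline(ss, token, ',')) {
    // Trim surrounding whitespace, including the trailing newline.
    const std::size_t first = token.find_first_not_of(" \t\r\n");
    if (first == std::string::npos) continue;
    const std::size_t last = token.find_last_not_of(" \t\r\n");
    token = token.substr(first, last - first + 1);

    // A token is either a single number "N" or an inclusive range "LO-HI".
    const std::size_t dash = token.find('-');
    const int lo = std::stoi(token.substr(0, dash));
    const int hi =
        dash == std::string::npos ? lo : std::stoi(token.substr(dash + 1));
    for (int cpu = lo; cpu <= hi; ++cpu) cpus.push_back(cpu);
  }
  return cpus;
}
```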

CPU Hyperthreading Information

For many high-performance programs, using hyperthreading can actually reduce performance. Here we can further narrow the CPU list down to physical cores. The file at the following path lists the logical CPU numbers that share a physical core with this CPU:

/sys/devices/system/node/node[0-9]+/cpu[0-9]+/topology/thread_siblings_list

If thread_siblings_list contains only one number, this logical CPU does not share its physical core with any other logical CPU, so we can treat it as a physical core. For example:

    $ cat /sys/devices/system/node/node1/cpu89/topology/thread_siblings_list
    89

Because AWS p5 instances have hyperthreading disabled by default, we see only one number in thread_siblings_list.
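On a machine where hyperthreading is enabled, every logical CPU lists more than one sibling, so the single-number rule would select nothing. A common variation, shown here only as a sketch and not as what 8_topo.cpp does, is to keep a logical CPU when it is the lowest-numbered entry in its own sibling list. The function name and the map-based input are assumptions made for this example; the sibling lists are expected to be parsed already.

```cpp
#include <algorithm>
#include <map>
#include <vector>

// `siblings` maps each logical CPU number to the parsed contents of its
// thread_siblings_list. Returns one logical CPU per physical core: the
// lowest-numbered sibling. With hyperthreading disabled, every CPU is kept.
std::vector<int> OnePerPhysicalCore(
    const std::map<int, std::vector<int>> &siblings) {
  std::vector<int> picked;
  for (const auto &[cpu, sibs] : siblings) {
    if (sibs.empty() || *std::min_element(sibs.begin(), sibs.end()) == cpu) {
      picked.push_back(cpu);
    }
  }
  return picked;  // Ascending order, because std::map iterates in key order.
}
```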

PCI Device NUMA Node Information

The NUMA node to which a PCI device is attached can be read from the file at the following path:

/sys/bus/pci/devices/XXXX:BB:DD.F/numa_node

For example:

    $ cat '/sys/bus/pci/devices/0000:53:00.0/numa_node'
    0
    $ cat '/sys/bus/pci/devices/0000:ca:00.0/numa_node'
    1
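Reading this file from a program takes only a few lines. In the sketch below, NumaNodePath() and ReadIntFile() are hypothetical helpers. Note that, as far as I know, the kernel reports -1 in numa_node when it has no NUMA affinity information for the device, so callers should be prepared for a negative value.

```cpp
#include <fstream>
#include <optional>
#include <string>

// Builds the sysfs path of the numa_node file for a PCI address
// in XXXX:BB:DD.F form.
std::string NumaNodePath(const std::string &pci_addr) {
  return "/sys/bus/pci/devices/" + pci_addr + "/numa_node";
}

// Reads a single integer from a file. Returns std::nullopt if the file
// cannot be opened or does not start with an integer.
std::optional<int> ReadIntFile(const std::string &path) {
  std::ifstream in(path);
  int value = 0;
  if (!(in >> value)) return std::nullopt;
  return value;
}
```

Usage would look like ReadIntFile(NumaNodePath("0000:ca:00.0")), which on the machine above should yield 1.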

Program Interface

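    struct TopologyGroup {
      int cuda_device;                          // GPU number
      int numa;                                 // NUMA node number
      std::vector<struct fi_info *> fi_infos;   // network cards paired with this GPU
      std::vector<int> cpus;                    // CPU core numbers assigned to this GPU
    };

    std::vector<TopologyGroup> DetectTopo(struct fi_info *info);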

We write a function DetectTopo() to obtain the system topology, grouping GPUs, network cards, and physical CPU cores. This function returns one TopologyGroup structure per GPU. The structure includes the GPU's number, its NUMA node number, the fi_info structure pointers of its network cards, and its CPU core numbers. We evenly distribute all physical CPU cores of a NUMA node among the GPUs on that node. The C++ code for this is too ugly to show in the article, so I'll leave it out.
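To give a rough idea of the CPU distribution step, here is a minimal sketch that splits the cores of one NUMA node into contiguous chunks, one per GPU, with chunk sizes differing by at most one. SplitEvenly() is a hypothetical helper, and the choice of contiguous chunks is an assumption of this example; the actual code in 8_topo.cpp may assign cores differently.

```cpp
#include <cstddef>
#include <vector>

// Splits `cpus` into `num_groups` contiguous chunks whose sizes differ by at
// most one. The first (cpus.size() % num_groups) chunks get the extra core.
std::vector<std::vector<int>> SplitEvenly(const std::vector<int> &cpus,
                                          std::size_t num_groups) {
  std::vector<std::vector<int>> groups(num_groups);
  if (num_groups == 0) return groups;
  const std::size_t base = cpus.size() / num_groups;
  const std::size_t extra = cpus.size() % num_groups;
  std::size_t pos = 0;
  for (std::size_t g = 0; g < num_groups; ++g) {
    const std::size_t len = base + (g < extra ? 1 : 0);
    const auto first = cpus.begin() + static_cast<std::ptrdiff_t>(pos);
    groups[g].assign(first, first + static_cast<std::ptrdiff_t>(len));
    pos += len;
  }
  return groups;
}
```

For instance, a NUMA node with 48 physical cores and 4 GPUs gives each GPU 12 cores, and each chunk could then be stored in the cpus field of the corresponding TopologyGroup.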

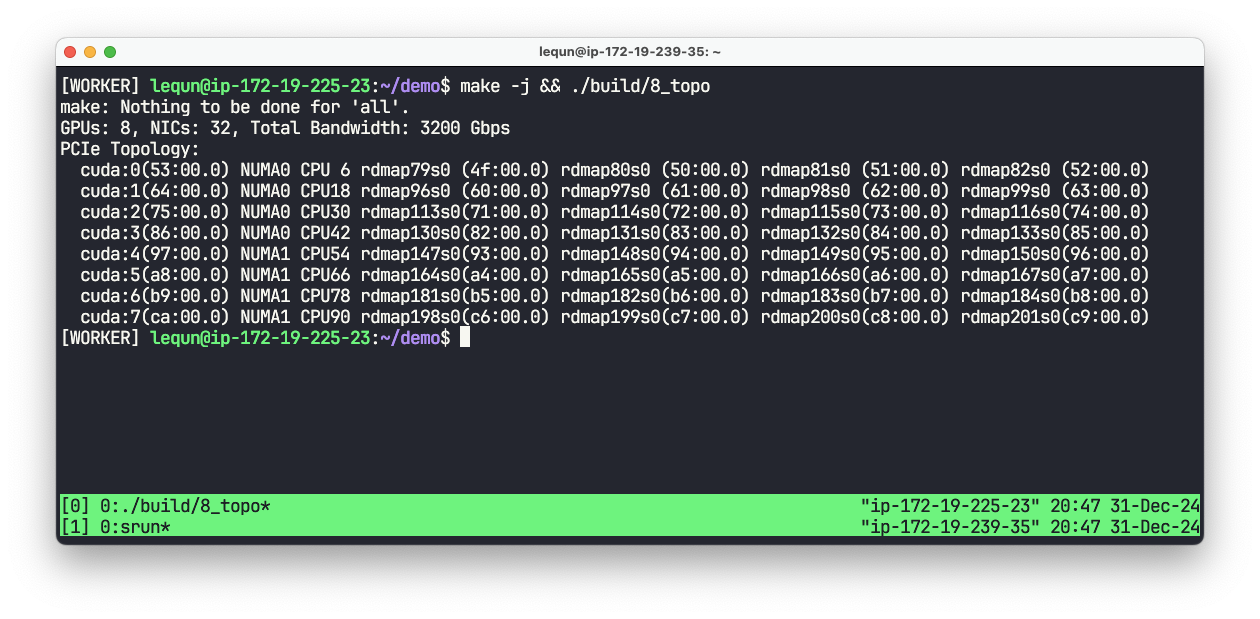

Results

Chapter code: https://github.com/abcdabcd987/libfabric-efa-demo/blob/master/src/8_topo.cpp