Harnessing 3200 Gbps Network (1): RDMA and EFA

Before we start writing code to operate the 3200 Gbps network, let’s understand the hardware, software, and system design philosophy required to drive this high-performance network.

RDMA

Most networks we use daily are based on the TCP/IP protocol suite. Applications interact with the Linux kernel through sockets. The Linux kernel maintains a complete TCP/IP protocol stack and is responsible for controlling the Network Interface Controller (NIC). Two NICs typically communicate over Ethernet at the link layer. The most common examples are gigabit NICs (1 Gbps) in home computers and 10-gigabit NICs (10 Gbps) in servers.

High-performance networks, on the other hand, use a completely different hardware and software stack called RDMA (Remote Direct Memory Access).

- Application Layer: Applications typically interact with RDMA NICs through the ibverbs API (also known as the verbs API). The verbs API is very different from sockets and reflects a completely different system design philosophy, which we’ll discuss later.

- Protocol Layer: Unlike TCP/IP, RDMA has its own protocol suite. Since I don’t research this area, I’m not familiar with the technical details. Perhaps the most intuitive difference is that RDMA doesn’t use “IP addresses” and “MAC addresses” but has its own addressing system.

- Network Layer: The most common network fabrics for RDMA are InfiniBand and RoCE. InfiniBand is a proprietary protocol - expensive but stable. RoCE (RDMA over Converged Ethernet) offers a better price-performance ratio but requires careful tuning, and many tech giants have published papers about running RoCE.

- Network Cards: The most common RDMA NICs are Mellanox’s ConnectX series. NVIDIA’s acquisition of Mellanox in 2020 validated the old internet meme about “NVIDIA’s fast network speed”. The ConnectX-7 generation achieves 400 Gbps and is often paired with H100 generation GPUs.

As its name suggests, RDMA can directly read and write remote (and local) memory. The memory here isn’t limited to host memory attached to the CPU but can also be the memory of other devices on the PCIe bus, such as GPU memory. Compared to the send() and recv() operations of sockets, RDMA offers a richer set of operations. They fall into two categories, two-sided RDMA and one-sided RDMA:

- Two-sided RDMA: Requires CPU participation from both communicating parties.

  - RECV: Target side prepares to receive a message.

  - SEND: Initiator sends a message to the target.

- One-sided RDMA: Only requires CPU participation from the initiator; the target CPU is unaware.

  - WRITE: Write data directly from the initiator’s memory to the target’s memory.

  - WRITE_IMM: Similar to WRITE, but additionally inserts an integer into the target’s Completion Queue to notify the target CPU.

  - READ: Read data directly from the target’s memory into the initiator’s memory.

  - ATOMIC: Perform atomic operations on the target’s memory, like Compare-and-Swap and Fetch-Add.
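To make the difference between the two categories concrete, here is a toy in-memory model in Python. It is only a sketch and not the verbs API: `ToyNode` and the free functions are hypothetical names invented for illustration, there is no network or NIC involved, and a `bytearray` stands in for registered memory. The point it tries to show is which operations need the target to have done something first (RECV before SEND) and which ones leave an event for the target CPU to see.

```python
from collections import deque


class ToyNode:
    """Hypothetical stand-in for one host; not a real verbs object."""

    def __init__(self, size: int = 64) -> None:
        self.memory = bytearray(size)  # plays the role of registered memory
        self.recv_queue = deque()      # (offset, length) buffers posted by this node's CPU
        self.completions = deque()     # events this node's CPU could poll

    def post_recv(self, offset: int, length: int) -> None:
        """RECV: the target CPU prepares a buffer before any SEND arrives."""
        self.recv_queue.append((offset, length))


def send(target: ToyNode, payload: bytes) -> None:
    """Two-sided SEND: lands in a buffer the target posted earlier."""
    if not target.recv_queue:
        raise RuntimeError("target has not posted a RECV")
    offset, length = target.recv_queue.popleft()
    if len(payload) > length:
        raise ValueError("payload larger than the posted buffer")
    target.memory[offset:offset + len(payload)] = payload
    target.completions.append(("RECV", len(payload)))


def write(target: ToyNode, offset: int, payload: bytes) -> None:
    """One-sided WRITE: target memory changes, target CPU sees no event."""
    target.memory[offset:offset + len(payload)] = payload


def write_imm(target: ToyNode, offset: int, payload: bytes, imm: int) -> None:
    """WRITE_IMM: like WRITE, plus an integer in the target's completion queue.

    Simplification: real verbs also consume a posted receive here; the toy skips that.
    """
    write(target, offset, payload)
    target.completions.append(("WRITE_IMM", imm))


def read(target: ToyNode, offset: int, length: int) -> bytes:
    """One-sided READ: copy target memory back to the initiator."""
    return bytes(target.memory[offset:offset + length])


def fetch_add(target: ToyNode, offset: int, delta: int) -> int:
    """ATOMIC Fetch-Add on a 64-bit word; returns the previous value."""
    old = int.from_bytes(target.memory[offset:offset + 8], "little")
    new = (old + delta) % 2**64
    target.memory[offset:offset + 8] = new.to_bytes(8, "little")
    return old


def compare_and_swap(target: ToyNode, offset: int, expected: int, desired: int) -> int:
    """ATOMIC Compare-and-Swap on a 64-bit word; returns the previous value."""
    old = int.from_bytes(target.memory[offset:offset + 8], "little")
    if old == expected:
        target.memory[offset:offset + 8] = desired.to_bytes(8, "little")
    return old


target = ToyNode()
target.post_recv(offset=0, length=16)
send(target, b"hello")                  # needs the RECV posted above
write(target, 16, b"silent")            # no completion on the target
write_imm(target, 32, b"loud", imm=7)   # target gets notified with 7
print(list(target.completions))         # [('RECV', 5), ('WRITE_IMM', 7)]
print(read(target, 16, 6))              # b'silent'
```

In the real verbs API these operations correspond to work request opcodes such as `IBV_WR_SEND`, `IBV_WR_RDMA_WRITE`, `IBV_WR_RDMA_WRITE_WITH_IMM`, `IBV_WR_RDMA_READ`, `IBV_WR_ATOMIC_CMP_AND_SWP` and `IBV_WR_ATOMIC_FETCH_AND_ADD`, all of which are posted asynchronously rather than called like the functions above.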

In RDMA, each communication endpoint is called a Queue Pair (QP), made up of a send queue and a receive queue. Similar to TCP and UDP on top of IP, RDMA has three common transport types:

- Reliable Connected Queue Pair (RC QP)

  - Connection-based, requiring connection establishment before communication. Usually has connection limits.

  - Reliable transmission with retransmission, guarantees message delivery order.

  - Can transmit large messages, far exceeding MTU size.

  - Supports all RDMA operations: RECV, SEND, WRITE, READ, ATOMIC

- Unreliable Connected Queue Pair (UC QP)

  - Similar to RC, but transmission is unreliable, no guaranteed delivery or order.

  - Supports only some RDMA operations: RECV, SEND, WRITE

- Unreliable Datagram Queue Pair (UD QP)

  - No connection required.

  - No guaranteed delivery or order.

  - Messages must be smaller than MTU.

  - Only supports RECV, SEND
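The three transport types can also be summarized as a small lookup table. The Python sketch below simply encodes the properties listed above; the names `Transport`, `TRANSPORTS` and `can_post` are hypothetical, and the 4096-byte MTU is an assumed example value, not something a real QP would report this way. The size check for UD is a rough approximation of "messages must fit in one MTU".

```python
from dataclasses import dataclass
from enum import Enum


class Op(Enum):
    RECV = "RECV"
    SEND = "SEND"
    WRITE = "WRITE"
    READ = "READ"
    ATOMIC = "ATOMIC"


@dataclass(frozen=True)
class Transport:
    name: str
    connected: bool       # must a connection be established first?
    reliable: bool        # delivery and ordering guaranteed?
    large_messages: bool  # may a single message exceed the MTU?
    ops: frozenset        # operations the transport supports


# Properties copied from the list above.
TRANSPORTS = {
    "RC": Transport("Reliable Connected", True, True, True, frozenset(Op)),
    "UC": Transport("Unreliable Connected", True, False, True,
                    frozenset({Op.RECV, Op.SEND, Op.WRITE})),
    "UD": Transport("Unreliable Datagram", False, False, False,
                    frozenset({Op.RECV, Op.SEND})),
}


def can_post(qp_type: str, op: Op, message_size: int, mtu: int = 4096) -> bool:
    """Return True if this toy table allows `op` with `message_size` bytes.

    `mtu` defaults to an assumed example value of 4096 bytes.
    """
    transport = TRANSPORTS[qp_type]
    if op not in transport.ops:
        return False
    if not transport.large_messages and message_size > mtu:
        return False
    return True


for qp_type, op, size in [("RC", Op.READ, 1 << 20),
                          ("UC", Op.READ, 64),
                          ("UD", Op.SEND, 8192)]:
    print(qp_type, op.value, size, can_post(qp_type, op, size))
```

In libibverbs the corresponding QP types are `IBV_QPT_RC`, `IBV_QPT_UC` and `IBV_QPT_UD`; the table here is only a reading aid for the trade-offs, not a substitute for querying the device.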

AWS EFA

Obviously, most individuals and even companies can’t afford NVIDIA HGX clusters, so they rely on cloud service providers. Cloud providers generally prefer not to expose hardware directly to tenants, instead adding a virtualization layer. On AWS p5 instances, AWS provides a virtual high-performance NIC called EFA (Elastic Fabric Adapter).

We don’t know whether EFA uses Amazon’s custom NICs or standardized products underneath, whether the network protocol is InfiniBand or RoCE, or whether the physical medium is fiber optic or copper. Of course, we don’t need to know - we can contact support if there are issues. Interestingly, EFA uses Amazon’s custom protocol SRD (Scalable Reliable Datagram).

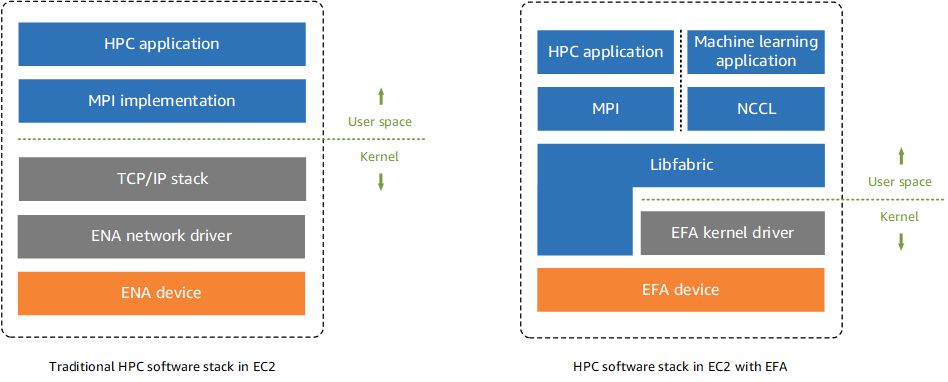

So how should applications use EFA? The EFA documentation includes this diagram:

From this diagram and documentation, we can see that we need to use libfabric to work with EFA.